CHIBA

Name of the competing system: M2HR system

Name and email of the responsible person: Myagmarbayar Nergui ( myagaa(at)graduate.chiba-u.jp)

Date and place: 6/22/2013, Chiba University, Japan

Data sources

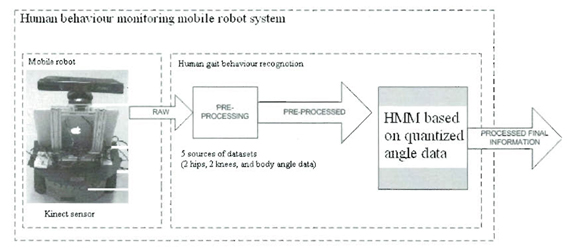

The diagram above shows the data collection and data processing of our human behavior monitoring robot system. While the mobile robot tracks and follows the human subject from a side viewpoint, we calculate five angles (both hips, both knees and the body angle) from the RGB-D images of the Kinect sensor. We then preprocess the angle data with a quantization method and apply a Hidden Markov Model (HMM) to the quantized data. To apply the HMM, we first collect training data from experiments covering the different activities, and then recognize test activities using the parameters estimated during training. Finally, our system outputs the states of the predefined activities.
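A minimal sketch of the quantize-then-HMM step is shown below. Several assumptions are flagged here because the text does not specify them. The quantizer uses uniform bins over 0-180 degrees. For brevity, the model works on a single angle stream (e.g. the right knee angle) rather than all five angles. Recognition uses one discrete HMM per activity and picks the activity with the highest forward-algorithm likelihood. The source does not say whether it uses one HMM per activity or a single HMM whose hidden states are the activities. The `MODELS` parameters are hypothetical hand-set values standing in for the trained ones, and training itself (e.g. Baum-Welch) is omitted.

```python
import math
from typing import Dict, List, Sequence, Tuple

# A discrete HMM as (start probabilities, transition matrix, emission matrix).
HMM = Tuple[List[float], List[List[float]], List[List[float]]]

def quantize(angle_deg: float, n_bins: int = 6) -> int:
    """Map an angle in degrees to one of n_bins uniform bins over 0-180 (assumed scheme)."""
    clipped = min(max(angle_deg, 0.0), 180.0)
    return min(int(clipped / (180.0 / n_bins)), n_bins - 1)  # 180 lands in the last bin

def log_likelihood(obs: Sequence[int], start: List[float],
                   trans: List[List[float]], emit: List[List[float]]) -> float:
    """Scaled forward algorithm: returns log P(obs | model)."""
    n = len(start)
    log_p = 0.0
    alpha: List[float] = []
    for t, o in enumerate(obs):
        if t == 0:
            alpha = [start[i] * emit[i][o] for i in range(n)]
        else:
            alpha = [sum(alpha[j] * trans[j][i] for j in range(n)) * emit[i][o]
                     for i in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return -math.inf  # sequence impossible under this model
        alpha = [a / scale for a in alpha]  # rescale each step to avoid underflow
        log_p += math.log(scale)
    return log_p

def classify(obs: Sequence[int], models: Dict[str, HMM]) -> str:
    """Return the activity whose HMM assigns the sequence the highest likelihood."""
    return max(models, key=lambda name: log_likelihood(obs, *models[name]))

# Hypothetical hand-set parameters; in the real system these come from training.
STAY = [[0.9, 0.1], [0.1, 0.9]]
MODELS: Dict[str, HMM] = {
    "standing": ([0.5, 0.5], STAY, [[0.05, 0.15, 0.8], [0.1, 0.1, 0.8]]),
    "sitting": ([0.5, 0.5], STAY, [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1]]),
}

knee_angles = [95.0, 88.0, 92.0, 100.0]  # e.g. right knee angle, degrees
symbols = [quantize(a, n_bins=3) for a in knee_angles]
print(classify(symbols, MODELS))  # sitting
```

Scaling the forward variables at every step keeps long sequences (a 30-minute session at 10 samples per second) from underflowing to zero. The sum of the log scale factors equals the log-likelihood.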

For each data source, the following table is filled in:

Name | Right hip angle data |

Description of the information measured | Calculated from the shoulder center point, right hip point and right knee point extracted from the RGB-D image of the Kinect sensor (the joint points are extracted from the RGB-D image of the tracked human subject, who wears color markers on his/her lower limb joints). |

Units | Degrees |

Example values | 0-180 degrees |

Frequency of generated data in operation | Angle data are calculated at around 13-15 frames per second (FPS). |
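The hip angle can be read as the angle at the hip joint between the ray toward the shoulder center and the ray toward the knee. The sketch below computes it from three 3D points with the normalized dot product. Two things are assumptions: that the hip is the vertex of the angle, and that the points are Kinect camera coordinates in metres. The helper names `joint_angle` and `right_hip_angle` are hypothetical.

```python
import math
from typing import Sequence

Point = Sequence[float]  # (x, y, z) in Kinect camera coordinates, metres (assumed)

def joint_angle(a: Point, vertex: Point, b: Point) -> float:
    """Angle in degrees (0-180) at `vertex` between rays vertex->a and vertex->b."""
    u = [ai - vi for ai, vi in zip(a, vertex)]
    v = [bi - vi for bi, vi in zip(b, vertex)]
    nu = math.sqrt(sum(c * c for c in u))
    nv = math.sqrt(sum(c * c for c in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("coincident points: angle undefined")
    cos_t = sum(ui * vi for ui, vi in zip(u, v)) / (nu * nv)
    cos_t = max(-1.0, min(1.0, cos_t))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_t))

def right_hip_angle(shoulder_center: Point, right_hip: Point, right_knee: Point) -> float:
    # Hypothetical wrapper: hip as vertex, shoulder center and knee as the two ends.
    return joint_angle(shoulder_center, right_hip, right_knee)

# Upright leg: shoulder above hip above knee -> about 180 degrees.
print(round(right_hip_angle((0.0, 1.4, 2.0), (0.0, 0.9, 2.0), (0.0, 0.5, 2.0)), 3))
```

The same function applies to the left hip with the left-side points. Under this reading, a straight upright posture gives about 180 degrees and a seated posture about 90 degrees, which matches the 0-180 range in the table.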

Name | Left hip angle data |

Description of the information measured | Calculated from the shoulder center point, left hip point and left knee point extracted from the RGB-D image of the Kinect sensor (the joint points are extracted from the RGB-D image of the tracked human subject, who wears color markers on his/her lower limb joints). |

Units | Degrees |

Example values | 0-180 degrees |

Frequency of generated data in operation | Angle data are calculated at around 13-15 frames per second (FPS). |

The same applies to the right knee angle data and the left knee angle data.

Name | Body angle data |

Description of the information measured | Calculated from the shoulder center point, hip center point and a virtual ground point extracted from the RGB-D image of the Kinect sensor (the joint points are extracted from the RGB-D image of the tracked human subject, who wears color markers on his/her lower limb joints). |

Units | Degrees |

Example values | 0-180 degrees |

Frequency of generated data in operation | Angle data are calculated at around 13-15 frames per second (FPS). |
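The source does not define the virtual ground point. The sketch below assumes it is the hip center projected straight down onto a floor plane `y = floor_y`, with the camera y axis vertical. It also assumes the body angle is measured at the hip center between the shoulder center and that ground point. Under these hypothetical choices an upright trunk gives 180 degrees, and leaning forward lowers the value. The angle is computed as `atan2(|u x d|, u . d)`, which stays accurate near 0 and 180 degrees, where `acos` of a dot product loses precision.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), y assumed vertical (up)

def virtual_ground_point(hip_center: Vec3, floor_y: float) -> Vec3:
    """Hypothetical: project the hip center straight down onto the plane y = floor_y."""
    x, _, z = hip_center
    return (x, floor_y, z)

def body_angle(shoulder_center: Vec3, hip_center: Vec3, floor_y: float) -> float:
    """Angle in degrees at the hip center between the shoulder and the ground point."""
    g = virtual_ground_point(hip_center, floor_y)
    u = tuple(s - h for s, h in zip(shoulder_center, hip_center))  # hip -> shoulder
    d = tuple(p - h for p, h in zip(g, hip_center))                 # hip -> ground
    if not any(u) or not any(d):
        raise ValueError("degenerate geometry: angle undefined")
    cross = (u[1] * d[2] - u[2] * d[1],
             u[2] * d[0] - u[0] * d[2],
             u[0] * d[1] - u[1] * d[0])
    dot = sum(a * b for a, b in zip(u, d))
    return math.degrees(math.atan2(math.sqrt(sum(c * c for c in cross)), dot))

# Trunk leaning 45 degrees forward -> about 135 degrees.
print(round(body_angle((0.5, 1.4, 2.0), (0.0, 0.9, 2.0), floor_y=0.0), 3))
```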

DataSets

After, for instance, 30 minutes of operation, the competitor generates the following data sets:

- An array of arrays, each of the form [time, body angle, left knee angle, right knee angle, left hip angle, right hip angle], recorded every 100 ms.

- An image data set
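Angles are calculated at around 13-15 FPS, but the data set stores one row every 100 ms, so samples must be mapped onto a uniform grid. The source does not say how this is done. The sketch below assumes linear interpolation between the neighbouring raw samples; sample-and-hold would be an equally simple alternative. The function name `resample` is hypothetical. The row layout follows the bullet above.

```python
from bisect import bisect_right
from typing import List, Sequence

Row = Sequence[float]  # [time_s, body, left_knee, right_knee, left_hip, right_hip]

def resample(samples: Sequence[Row], period_s: float = 0.1) -> List[List[float]]:
    """Linearly interpolate irregular angle rows onto a uniform time grid."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to interpolate")
    times = [row[0] for row in samples]
    if any(b <= a for a, b in zip(times, times[1:])):
        raise ValueError("timestamps must be strictly increasing")
    out: List[List[float]] = []
    k = 0
    while True:
        t = times[0] + k * period_s  # multiply rather than accumulate to limit drift
        if t > times[-1] + 1e-9:     # small tolerance for floating-point error
            break
        i = min(bisect_right(times, t) - 1, len(samples) - 2)  # left neighbour index
        a, b = samples[i], samples[i + 1]
        w = (t - a[0]) / (b[0] - a[0])
        out.append([t] + [va + w * (vb - va) for va, vb in zip(a[1:], b[1:])])
        k += 1
    return out

# Roughly 14 FPS raw stream (about 71 ms apart) -> rows every 100 ms.
raw = [[n / 14.0] + [90.0 + n] * 5 for n in range(15)]
print(len(resample(raw)))
```

The resulting list of rows could then be written to a MAT-file, for example with SciPy's `scipy.io.savemat`.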

Files:

- A file containing these samples in MATLAB .mat format

- Images captured by the Kinect sensors.

Devices used during benchmark

Device name | Type | Position in LLab (x,y,z) in meters | Position in user | Input | Output | Communication means to your system |

iRobot Create robot (shared control: autonomous, or manual via joystick) | Presence | Tracks and follows the actor (we usually use a larger robot, but for this competition we bought an iRobot Create; we are now deploying our software on it and are not yet sure how well it will work, so if possible, please provide enough space to track and follow the actor) | Near the actor, tracking from a side viewpoint | Kinect sensor data on human motion and obstacles | Robot motion control | Serial port |

2 Kinect sensors | Presence | - | On the robot | Person's movements | Images | Serial port |

Color marker support (not a device but a garment) | Presence | - | 2 knee and 2 ankle joints | - | - | - |
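The iRobot Create is driven over its serial port through the Create Open Interface. The sketch below builds the Drive Direct command: opcode 145, followed by the right and then the left wheel velocity as big-endian signed 16-bit values in mm/s, limited to -500 to 500. The startup bytes 128 (Start) and 131 (Safe mode) are also Open Interface opcodes. The side-following law is a hypothetical illustration, not the authors' controller. It assumes the Kinect faces the robot's left side, and that `ahead_m` is the person's offset along the robot's forward axis while `lateral_m` is the person's sideways distance. The gains and the 1.5 m target distance are made-up values.

```python
import struct

START_SAFE = bytes([128, 131])  # OI Start, then Safe mode
DRIVE_DIRECT = 145              # OI opcode: independent wheel velocities
MAX_SPEED = 500                 # mm/s, OI velocity limit

def clamp(v: float) -> int:
    """Round and clamp a wheel velocity to the OI range."""
    return int(max(-MAX_SPEED, min(MAX_SPEED, round(v))))

def drive_direct_packet(right_mm_s: float, left_mm_s: float) -> bytes:
    # Opcode, then right and left velocities as big-endian signed 16-bit integers.
    return struct.pack(">Bhh", DRIVE_DIRECT, clamp(right_mm_s), clamp(left_mm_s))

def side_follow(ahead_m: float, lateral_m: float, target_lateral_m: float = 1.5,
                k_v: float = 400.0, k_turn: float = 200.0) -> bytes:
    """Hypothetical proportional law for a person walking on the robot's left."""
    v = k_v * ahead_m                                # person ahead -> speed up
    turn = k_turn * (lateral_m - target_lateral_m)   # too far -> steer left
    return drive_direct_packet(v + turn, v - turn)   # right wheel faster turns left

print(side_follow(0.1, 1.7).hex())
```

With pyserial, the bytes could be written to a port opened as `serial.Serial(port, 57600)`, where 57600 baud is the Create's default rate and `port` is a placeholder for the actual device name. Send `START_SAFE` before the first drive command.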