Track 1 – Indoor Localization and Tracking for AAL

Technical annex of indoor localization and tracking – EvAAL Competition

Important: this version of the annex will be refined using feedback from the competitors. Refined versions will be distributed promptly to the competitors through the contest(at)evaal.aaloa.org mailing list.

Technologies

Each team should implement a localization system that covers the area of the Living Lab; there is no limit on the number of devices. Localization systems can be based on different technologies, including (but not limited to) measurements on radio communications (for example RSS or angle of arrival) based on standard radios (such as IEEE 802.11, IEEE 802.15.4, or IEEE 802.15.1), RFID, ultra-wideband, infrared sensors, active infrared break beams, ultrasound, and camera and optical systems. The proposed systems may also combine different technologies. Other technologies may be accepted provided they are compatible with the constraints of the hosting living lab. Competitors wishing to check such compatibility may contact the organizers by e-mail (info(at)evaal.aaloa.org). Moreover, this year's competition includes the possibility of exploiting the context information provided by the Living Lab (such as doors opening or closing, or lights being switched on or off) in order to refine the proposed localization system (details are given in the refinement of this document).

The teams should consider possible restrictions related to the availability of power plugs, cable routing, attachment of devices to walls or furniture in the Living Lab, etc. The requirements of the proposed localization systems should be communicated at an early stage so that the necessary on-site arrangements can be made. However, the Technical Program Committee (TPC) may exclude localization systems if their deployment is incompatible with the living lab constraints.

Competitors are requested to integrate their solution with our logging and benchmarking system. The integration will be guided in detail, and a competitor's integration package will be delivered for this purpose. The actual details of this integration are given in the refinement of this document.

Benchmark Testing

The score for measurable criteria for each competing artefact will be evaluated by means of benchmark tests (prepared by the organizing committee). For this purpose each team will be allocated a precise time slot at the living lab, during which the benchmark tests will be carried out. The benchmark consists of a set of tests, each of which will contribute to the assessment of the scores for the artefact. The Evaluation Committee (EC) will ensure that the benchmark tests are applied correctly to each artefact. The evaluation process will also assign scores to the artefact for the criteria that cannot be assessed directly through benchmark testing.

When both benchmark testing and EC evaluation have been completed, the overall score for each artefact will be calculated using the weightings given with the evaluation criteria in this document. All final scores will be disclosed at the end of the competition, and the artefacts ranked according to this final score.

The time slot for benchmark testing is divided into three parts. In the first part, the competing team will deploy and configure their artefact in the living lab. This part should last no more than 60 minutes. In the second part, the benchmark will be applied. During this phase the competitors will have the opportunity to perform only short reconfigurations of their systems. In the last part the teams will remove the artefact from the living lab in order to enable the installation of the next competing artefact.

Competing teams which fail to meet the deadlines in parts 1 and 3 will be given the minimum score for each criterion related to the benchmark test. Furthermore, artefacts should be kept active and working during the whole second part. If benchmark testing in the second part is not completed, the team will be awarded a minimum score for all the missing tests.

During the second part, the localization systems will be evaluated in three phases:

Phase 1. In this phase each team must locate a person inside an Area of Interest (AoI). In a typical AAL scenario, the AoI could be inside a specific room (bathroom, bedroom), in front of a kitchen, etc. (see the refinement for the official AoIs).

Phase 2. In this phase a person moving inside the Living Lab must be located and tracked (only 2D localization and tracking are planned here). During this phase only the person to be localized will be inside the Living Lab. Each localization system should produce localization data at a rate of one new item of data every half a second (this will also be used to evaluate availability). The path followed by the person will be the same for each test, and it will not be disclosed to competitors before the benchmarks are applied. The environment will be made as similar as possible to a house. This means that, if possible, typical appliances will be switched on, nearby Wi-Fi access points will be active, cellular phones will be on, etc. In order to evaluate the accuracy of the competing artefacts, the organizers will compare the output of the artefacts with a reference localization system. The number and lengths of the paths used in the tests, and the number and position of AoIs, are not yet fixed. Details will be communicated to the competitors in advance.

Phase 3. This phase is organized like the previous one, but the competing artefacts will be evaluated in the presence of a second person (the disturber) who moves inside the Living Lab. Only one person (the actor) must be localized by the competing artefacts; the second one will follow a predefined path unknown to the competitors.

1. There is no requirement of localising the disturber.

2. Only the actor can carry equipment provided by the competitor.

3. The actor will start moving at least 5 seconds before the disturber.

4. Both the actor and the disturber may generate contextual events: if any, the first such event will be generated by the actor.

5. When the disturber generates an event, if any, the actor will be at least 2 metres away.

Evaluation criteria:

In order to evaluate the competing localization systems, the TPC will apply the evaluation criteria listed in this document. For each criterion, a numerical score will be awarded. Where possible the score will be measured by direct observation or technical measurement. Where this is not possible, the score will be determined by the Evaluation Committee (EC). The EC will be composed of volunteer members of the Technical Program Committee (TPC), and will be present during the competition at the Living Lab.

The overall score will be computed as the weighted sum of the scores for the following evaluation criteria:

1. Accuracy [weight 0.25] – each produced localization sample is compared with the reference position and the error distance is computed.

During the first phase of the competition the user will stop for 30 seconds in each Area of Interest (AoI), after a predefined walk that is the same for all competitors. Accuracy in this case will be measured as the fraction T of time in which the localization system provides the correct information about:

- Presence of the user in a given AoI

- Absence of the user from any AoI

The score is given by:

Accuracy score = 10*T
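As an illustration only, the Phase 1 score can be sketched as follows. The sketch assumes that both the system output and the reference are available as equal-length sequences of per-sample AoI labels taken at a uniform rate, so that the fraction of matching samples approximates the fraction of time T. The function name `aoi_accuracy_score`, the label strings, and the use of `None` for "outside any AoI" are hypothetical and not part of the official benchmarking system.

```python
def aoi_accuracy_score(reported, reference):
    """Return 10*T, where T is the fraction of samples whose AoI label is correct.

    reported, reference: equal-length sequences of AoI labels, one per sample.
    None means "the user is in no AoI" (an assumption of this sketch).
    """
    if len(reported) != len(reference) or len(reference) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    # A sample is correct when presence in the right AoI, or absence from
    # every AoI, is reported exactly as in the reference.
    correct = sum(1 for r, g in zip(reported, reference) if r == g)
    t = correct / len(reference)
    return 10 * t
```

For example, if three out of four samples match the reference, T is 0.75 and the score is 7.5.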

For the last two phases, the stream produced by competing systems will be compared against a logfile of the expected position of the user. Specifically, we will evaluate the individual error of each measurement (the Euclidean distance between the measured and the expected points), and we will estimate the 75th percentile P of the errors. In order to produce the score, P will be scaled to the range [0,10] according to the following formula:

Accuracy score = 10 if P <= 0.5 m

Accuracy score = 4*(0.5-P)+10 if 0.5 m < P <= 2 m

Accuracy score = 2*(4-P) if 2 m < P <= 4 m

Accuracy score = 0 if P > 4 m

The final score on accuracy will be the average between the scores obtained in all the phases.
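The tracking score for a single phase could be computed along the following lines. This is a minimal sketch under stated assumptions: the annex does not say how the 75th percentile is computed, so the nearest-rank method is used here as one possible choice; the first branch of the formula is assumed to apply for P up to 0.5 m, which is what continuity with the second branch suggests; and measured and expected points are assumed to be already paired one to one. All function names are hypothetical.

```python
import math

def percentile_nearest_rank(values, q):
    """q-th percentile (0 < q <= 100) using the nearest-rank method (assumed)."""
    if not values:
        raise ValueError("at least one value is required")
    ordered = sorted(values)
    rank = math.ceil(q / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]

def accuracy_score_from_p(p):
    """Map the 75th-percentile error P (metres) to a score in [0, 10]."""
    if p <= 0.5:
        return 10.0
    if p <= 2.0:
        return 4.0 * (0.5 - p) + 10.0
    if p <= 4.0:
        return 2.0 * (4.0 - p)
    return 0.0

def tracking_accuracy_score(measured, expected):
    """Score one tracking phase from paired (x, y) points given in metres."""
    # Individual error of each measurement: Euclidean distance to the reference.
    errors = [math.dist(m, e) for m, e in zip(measured, expected)]
    return accuracy_score_from_p(percentile_nearest_rank(errors, 75))
```

The two linear branches meet at P = 2 m (both give 4) and the last one reaches 0 at P = 4 m, so the mapping is continuous over the whole range.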

2. Installation complexity [weight 0.15] – a measure of the effort required to install the AAL localization system in a flat, measured by the evaluation committee as a function of the person-minutes of work needed to complete the installation. (The person-minutes of the first installer will be fully included; the person-minutes of any other installer will be divided by 2.)

The time T is measured in minutes from the time when the competitors enter the living lab to the time when they declare the installation complete (no further operations or configurations of the system will be admitted after that time), and it will be multiplied by the number of people N working on the installation. The parameter T*N will be translated into a score (ranging from 0 to 10) according to the following formula:

Installation Complexity Score = 10 if T*N <= 10

Installation Complexity Score = 10 * (60-T*N) / 50 if 10 < T*N <= 60

Installation Complexity Score = 0 if T*N > 60
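The mapping from installation effort to score can be sketched as below. The sketch follows the T*N form of the formula, with the middle branch assumed to cover efforts above 10 and up to 60 person-minutes; it does not apply any halving of additional installers' time. The function name and parameter names are hypothetical.

```python
def installation_complexity_score(minutes, installers):
    """Map installation effort T*N (person-minutes) to a score in [0, 10]."""
    effort = minutes * installers  # T*N
    if effort <= 10:
        return 10.0
    if effort <= 60:
        # Linear decrease from 10 (at 10 person-minutes) to 0 (at 60).
        return 10.0 * (60 - effort) / 50
    return 0.0
```

For instance, two people working for 15 minutes give T*N = 30 and a score of 6.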

3. User acceptance [weight 0.25] – expresses how invasive the localization system is in the user's daily life, and thereby the impact perceived by the user; this parameter will be evaluated by the evaluation committee following predefined criteria published in the refinement of this annex.

4. Availability [weight 0.2] – fraction of time the localization system was active and responsive. It is measured as the ratio A between the number of produced localization data and the number of expected data: competing systems are expected to provide one sample every half a second. Excess samples are discarded.

The values of availability A will be translated into a score (ranging from 0 to 10) according to the following formula:

Availability score = 10 * A
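One way to picture the availability ratio is sketched below. The annex does not specify how excess samples are discarded; this sketch assumes that the test is divided into consecutive half-second slots and that at most one sample per slot is counted. The function name, the timestamp representation (seconds from the start of the test), and the slotting rule are all assumptions.

```python
def availability_score(timestamps, duration_s, period_s=0.5):
    """Return 10*A, where A = counted samples / expected samples.

    timestamps: sample times in seconds from the start of the test.
    At most one sample per period_s-long slot is counted (assumed rule).
    """
    expected = int(duration_s // period_s)
    if expected <= 0:
        raise ValueError("test duration must span at least one period")
    # Keep one sample per slot; samples outside the test window are ignored.
    slots = {int(t // period_s) for t in timestamps if 0 <= t < duration_s}
    a = len(slots) / expected
    return 10 * a
```

With a 2-second test, four slots are expected; a system that only reports during the first half second gets A = 0.25 and a score of 2.5, however many samples it emits in that slot.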

5. Interoperability with AAL systems [weight 0.15] – this parameter evaluates the degree of interoperability of the solution in terms of openness of the software, adoption of standards for both software and hardware, and replaceability of parts of the solution with other ones. The actual implementation of the metric is described in the refinement of this annex.
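The overall score is the weighted sum of the five criterion scores, each in the range 0 to 10. A minimal sketch, using the weights listed above (which sum to 1) and hypothetical dictionary keys for the criteria:

```python
# Weights as listed for the five evaluation criteria; the key names are
# illustrative and not taken from the official benchmarking system.
WEIGHTS = {
    "accuracy": 0.25,
    "installation_complexity": 0.15,
    "user_acceptance": 0.25,
    "availability": 0.20,
    "interoperability": 0.15,
}

def overall_score(scores):
    """Weighted sum of per-criterion scores (each expected in [0, 10])."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criterion scores: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
```

Because the weights sum to 1, a system scoring 10 on every criterion obtains an overall score of 10.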

Setting

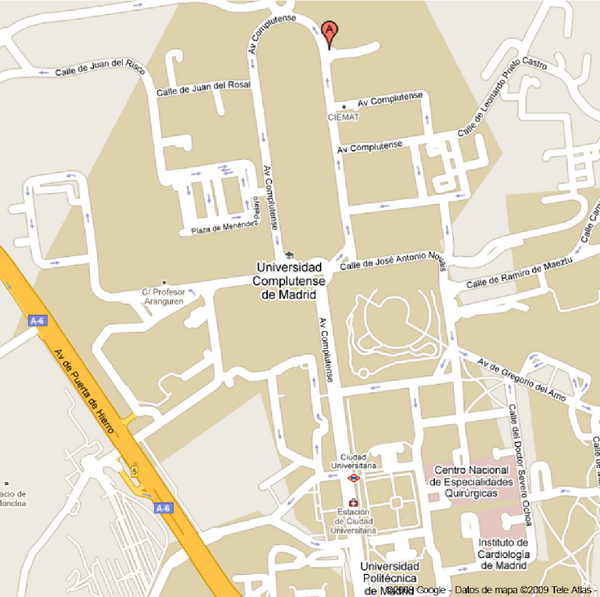

The Smart House Living Lab is located at the Escuela Técnica Superior de Ingenieros de Telecomunicación of the Universidad Politécnica de Madrid (ETSIT UPM), in Madrid, Spain (www.etsit.upm.es).

The complete address is:

Escuela Técnica Superior de Ingenieros de Telecomunicación (ETSIT)

Avenida Complutense nº 30

Universidad Politécnica de Madrid (UPM)

Ciudad Universitaria s/n

28040 Madrid

GPS: 40.45236386045641,-3.727326393127441

The site is easily reachable by Madrid's underground system; the nearest metro station is Ciudad Universitaria.

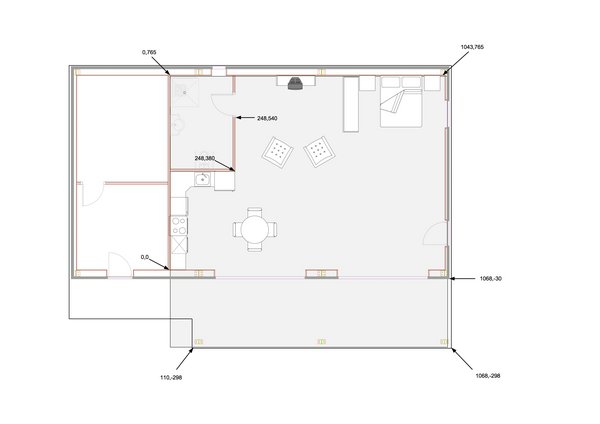

The localizable area is identified by the grey area of the figure above.

Infrastructure

Rooms:

- Control room with unidirectional vision glass to observe the common space

- 3D immersive room

- Bathroom

- Covered porch outside the common space and control room, with a garden and a fish pool with a waterfall-type fountain

- Common space that includes:

  - Kitchen

  - Living room

  - Bedroom

Only the bathroom, the common space and the porch will be localizable.

The actual localizable space and its reference coordinates can be downloaded here.

Ceiling, floor and walls

- Drop ceiling

- Floor with distributed hidden "holes" with plugs

- Detachable wall modules

The ceiling does not have a fixed height.

The lowest part, where the porch and the entrance are, is 2.32 meters high.

From the porch to the first girder the ceiling slopes upward until it reaches a height of 2.62 meters.

From that point on the ceiling is 2.62 meters high.

The transversal diagram of the structure is visible here.

The best option for fixing appliances to the ceiling and walls is blue tack.

We have also successfully used adhesive velcro on other occasions.

If necessary, the ceiling tiles can be lifted. Each tile is 57 cm x 57 cm.

Communication

- Ethernet sockets

- Wi-Fi

- High speed internet connection

Competitors will have to send their localization samples through the Wi-Fi or the Ethernet connection.

Sensors and actuators connected through KNX

- 2 flood sensors (in kitchen and bathroom)

- 1 smoke sensor (next to the kitchen)

- 1 fire sensor (next to the kitchen)

- 6 blind actuators (in bedroom, entrance/living room and kitchen)

- 4 door actuators (entrance, bathroom, living room and kitchen)

- 2 light sensors (in bedroom and living room)

- 1 alarm detection pull cord (in bathroom)

- 4 motion sensors (in entrance, living room, bedroom and bathroom)

- 6 magnetic contact sensors (in entrance door, living room door, bathroom door, kitchen door, bedroom window and bathroom window)

- 2 HVAC systems (in ceiling)

Competitors will receive contextual events coming from the light switches and from a stationary bike (which is NOT connected through KNX). The coordinates of these appliances are published here.

Other equipment

- 1 movable IP PTZ camera

- 5 environmental microphones distributed across the user's rooms

- 10 ceiling speakers distributed across the user's rooms

- 3 active RFID readers pluggable to Ethernet sockets

- Electronic stationary bicycle with embedded computer and activity monitor

Computing

- 4 panel PCs

- 1 server for automation purposes

Software:

- Loquendo ASR Voice recognition software (English and Spanish)

- Loquendo TTS voices (English and Spanish)

- Prosyst OSGI Smart Home SDK

Household appliances

- Home appliances (fridge, oven, cooker and washing machine, automated with Maior-Domo® by Fagor)

- 37-inch flat-screen television

- Sony's Aibo personal entertainment robot dog

Photo of the kitchen of the Living Lab